Google Pushes Real-Time Indexing as AI Arms Race Heats Up

Posted on Tuesday, June 24th, 2025 at 9:34 pm

Jeff Dean Makes the Case for Google’s Advantage

Jeff Dean, one of Google’s earliest hires and now its Chief Scientist, has been vocal about a feature he says gives Google a real edge: index freshness. In a recent X post, Dean emphasized the years of work that have gone into keeping Google’s index up to date in real time. The comment drew a pointed contrast with how other large language model providers handle search data.

He was responding to AI researcher Delip Rao, who had warned developers about relying on closed-source AI tools such as those from OpenAI or Anthropic. Rao explained that many of these systems depend on search indexes that are not updated frequently, which leads to dead links and unreliable results. Dean’s reply signaled that Google’s Gemini is different: because it draws on Google’s live search index, it can pull fresher data and return fewer of those errors.

Index freshness is something I and many others at Google have worked on for many years. https://t.co/XEimydWqm7

— Jeff Dean (@JeffDean) June 20, 2025

Evolution of Index Freshness

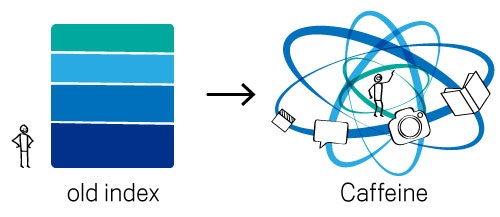

Google’s commitment to real-time indexing isn’t new. It dates back to at least 2009, when the company previewed Caffeine, a major infrastructure overhaul (completed in 2010) that reworked how Google crawled and indexed the web. Before Caffeine, content was indexed in large batches. After the update, Google processed the web in small portions and refreshed its index continuously, so new content could appear in results far sooner.

That speed remains a key advantage today. Whether users are reading AI Overviews in search, chatting with Gemini, or using Google’s AI Mode, the system draws on a constantly updated index. This shortens the lag between when a page is published and when the AI can use it in its answers.

Google’s emphasis on indexing speed is part of a long-term strategy to keep AI outputs from being built on stale or missing data.

Competitors Are Gaining Ground

Microsoft moved early to connect its Bing search infrastructure with OpenAI’s models. Its AI-powered Bing, later rebranded as Copilot, was built on what Microsoft called the Prometheus model, which grounds OpenAI’s GPT models in results from Bing’s live index to improve the relevance and freshness of AI responses.

That integration gave Microsoft an early lead. OpenAI, meanwhile, has been catching up. With significant funding at its disposal, it is investing in better indexing and faster data integration. The gap in how these systems gather and update information is narrowing quickly.

The lines that once separated these platforms are blurring as the technology improves on all sides.

Why This Matters for AI Tools Built on Search

AI systems that depend on stale indexes often produce broken links, missing context, and unreliable information. This has direct implications for tools used across professional fields, including the legal industry. When the source data isn’t current, even the most advanced models can produce responses that are misleading or outdated. The problem comes up most often with closed systems that don’t pull from a real-time search index.

Platforms that connect to live indexes reduce these risks. As AI features become more deeply embedded in research tools and client-facing services, the reliability of their outputs will depend on how quickly and accurately they can access updated content.

What Law Firms Should Take From This

Index freshness directly influences how content is found and displayed through AI-assisted search. Law firms using AI tools for marketing, intake, or research should take a close look at where those tools pull their information from. If the source index isn’t consistently updated, the risk of inaccurate or missing content goes up.

TSEG monitors these changes closely. We help firms stay visible across platforms that are starting to rely more heavily on real-time indexing. Whether it’s content strategy or technology consulting, we focus on keeping firms competitive as the digital environment continues to change. Let us help your firm today.